This is the latest in the blog series co-authored by me and the wonderful Sue Fletcher-Watson. After a brief hiatus we are back with our latest post – how to put together a great poster. Designing an attractive and informative poster is an incredibly useful skill, especially for early-career researchers. For any non-academic readers – most academic conferences, and often internal events at universities, will have a poster session. These allow more people to share their research findings, in addition to the scheduled speakers. Posters matter, but in our experience there is surprisingly little guidance given on how to do them well. So here are our dos and don’ts of putting together a killer poster.

- Plan ahead

Putting together a great poster takes some time and you will need to factor in a few days to get it printed as well. So plan ahead. In our experience people leave posters to the absolute last minute (you know who you are). The result is usually something that looks haphazard and counterintuitive. There is also that mad panic and rush to the print shop… which is never open when you need it to be… and then you almost miss your flight… which is always best avoided.

Make sure you check whether it needs to be landscape or portrait, and what size the presentation boards will be. You don’t want a poster that’s too big for your board! The conference organisers will be able to tell you this.

A great poster is something you can come back to many times – it can be easily shared online, re-used at multiple events, and it might end up being displayed on your department walls – so it is worth doing well. When done properly, a poster should be easy to present, easy on the eye and it may even win you a prize or two :). So plan it properly.

- You can only tell one good story with a poster

In a paper you may have 2 or 3 ongoing stories, or main findings that the manuscript is constructed around. This doesn’t work for a poster. A poster needs a single key message that you want to get across. Make sure that this features in the title. We both prefer titles that convey the story or finding you’re reporting on, rather than emphasising the method or approach.

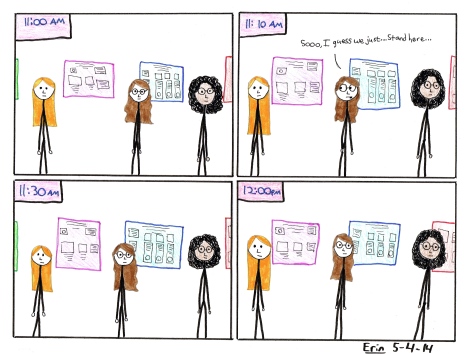

People will only be at your poster for a few minutes (at best). You might not be there or available to explain it to them. It will likely be crowded and noisy. All of this means that you need to boil down the message to a single narrative and explain it well in a few points. When Sue does a poster, she always tries to have two or three bullet points that set out the essential background and central research question. These are matched with two or three bullets to summarise the conclusions. It should be possible to understand the study by reading just these sections. The viewer can quickly and easily decide if they want to linger by your poster board, to get more detail on methods or results. The first step in designing a great poster is choosing this central narrative and presenting it neatly and briefly.

- Select the key pieces of information, and make them pop

Avoid large amounts of text. Graphics are a great way of conveying a lot of information in a small space. Don’t just paste in figures from your paper: these will probably need amending or focussing to fit the tight narrative that you have adopted for the poster.

Think about what will be central – literally, right in the middle – in your poster. This is the prime space for key graphics and findings. Construct the rest of the poster around this. You can also use colour to make sure important details are easy for the reader to spot. If you’re using, say, navy blue text on an off-white background, maybe you could reverse these colours for your conclusions so that they stand out from the main text?

- Imagine you are there… what visual aids would help?

Trial run your poster. You would never imagine giving a talk that you hadn’t practised (would you?!), and you need to apply the same logic to your posters. Show it to lab mates or departmental colleagues who are not familiar with your study. Can you use the poster to explain the study in about 3 minutes? Are there visual aids or diagrams that might help? Going through this process is vital to make sure that your poster actually works.

- Don’t be constrained by the template

Many institutions have set templates which are approved by corporate people somewhere (I guess). Our advice is to not let this constrain how you display your poster. You are the person who will have to stand next to this thing and use it. As long as you have the right logos and basic palette, and it is otherwise a brilliant poster, you should get away with it. And this is far preferable to a poster structure that simply doesn’t work. A common poster template is a landscape organised into three columns. If you add information to these in a traditional sequence of background – research question – methods – results – discussion, you can often end up with interesting graphs at the bottom or off to one side. Don’t be afraid of going a little off-piste if this helps you get your message across. We won’t tell corporate if you don’t…

- If you don’t have an eye for colour then ask someone who does

Finally, if you have bad taste when it comes to colours (again… you know who you are) then ask someone to help you. Try to avoid clashing colour combos and needlessly vivid, flashy figures. Posters are an inherently visual medium, so get the colours right. Think about what logos you have to have on the poster, and pick a palette which complements at least one of them. An easy fix is to go with different shades of the same colour – blues and greens work well – rather than mixing up your colours too much.

To round this off, here is a poster made by Sue, which she is pretty pleased with.

And there you have it. Investing time to make the poster good is worth it. You will enjoy the conference much more, you might win a prize, and it will look great on the lab wall once you’re done. So plan ahead, focus the message, get some good graphics… and be a great scientist!
